Refer to the documents below for pre-upgrade considerations:

--> Script to Collect DB Upgrade/Migrate Diagnostic Information (dbupgdiag.sql) (Doc ID 556610.1)

--> Things to Consider Before Upgrading to 11.2.0.3/11.2.0.4 Grid Infrastructure/ASM (Doc ID 1363369.1)

Make sure all CRS components are healthy before you start the Upgrade.

[grid@node1 grid] crsctl stat res -t | tee /home/grid/logs/crsctl_stat_p_bef_patch.txt

<= Is anything other than gsd OFFLINE ?
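
The question above can also be answered mechanically from the saved output. This is a minimal sketch, assuming the usual 11.2 layout of `crsctl stat res -t` (resource name on an unindented line, per-node state rows indented beneath it). The function name `offline_resources` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every resource that has an OFFLINE row in
# `crsctl stat res -t` output, ignoring ora.gsd.
# Assumes resource names start in column 1 and state rows are indented.
offline_resources() {
  awk '
    /^-+$/ || /^NAME/ || /^(Local|Cluster) Resources/ { next }  # rulers and headers
    /^[^ \t]/ { res = $1; next }                              # resource name line
    /OFFLINE/ && res != "ora.gsd" { print res }               # indented state row
  ' "$@" | sort -u
}
```

Running `offline_resources /home/grid/logs/crsctl_stat_p_bef_patch.txt` should print nothing when gsd is the only OFFLINE resource.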

[grid@node1 grid] crsctl query crs activeversion | tee /home/grid/logs/crsversion_bef_patch.txt

Oracle Clusterware active version on the cluster is [11.2.0.3.0]

[grid@node1 grid] crsctl query crs softwareversion | tee /home/grid/logs/crsversion_software_bef_patch.txt

Oracle Clusterware version on node [node1] is [11.2.0.3.0]

[grid@node1 grid] crsctl stat res -p | tee /home/grid/logs/crs_stat_p_bef_patch.txt

[grid@node1 grid] crsctl query css votedisk | tee /home/grid/logs/qry_css_bef_patch.txt

[grid@node1 grid] ocrcheck | tee /home/grid/logs/ocrchk_bef_patch.txt

[grid@node1 grid] crsctl check cluster -all | tee /home/grid/logs/check_cluster.txt
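
The checks above all follow the same "run a command, tee it to a log" pattern, which could be wrapped in a small function. This is only a sketch: the name `capture` and the `LOG_DIR` variable are hypothetical, and the file suffix simply mirrors the names used above.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the "command | tee file" pattern used above.
LOG_DIR="${LOG_DIR:-/home/grid/logs}"

capture() {
  local name="$1"
  shift
  mkdir -p "$LOG_DIR"
  # Keep stderr in the log as well, so failures are recorded too.
  "$@" 2>&1 | tee "$LOG_DIR/${name}_bef_patch.txt"
}

# Example calls (commented out so the block is safe to source anywhere):
# capture crsversion crsctl query crs activeversion
# capture ocrchk     ocrcheck
```

Keeping one log per command makes it easy to diff the "before" state against the same commands run after the upgrade.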

[oracle@node1 oracle] srvctl status database -d racdb | tee /home/oracle/upgrade/srvctl_status_bef_patch.txt

[oracle@node1 oracle] srvctl config database -d racdb | tee /home/oracle/upgrade/srvctl_config_bef_patch.txt

On both node1 and node2, create the directory for the 11.2.0.4 ORACLE_HOME:

mkdir -p /data01/app/11.2.0/grid_11204

[root@node1 ~]# mkdir -p /data01/app/11.2.0/grid_11204

[root@node1 ~]# cd /data01/app/11.2.0

[root@node1 11.2.0]# ls -ldh grid_11204

drwxr-xr-x 2 root root 4.0K Jan 9 14:32 grid_11204

[root@node1 11.2.0]# chown -R grid:oinstall grid_11204/

[root@node1 11.2.0]# chmod -R 775 grid_11204/

[root@node1 11.2.0]# ls -ldh grid_11204

drwxrwxr-x 2 grid oinstall 4.0K Jan 9 14:32 grid_11204

[root@node1 11.2.0]#
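
Because the directory has to be prepared on both nodes, a quick check of owner and mode can catch a node that was missed. A minimal sketch, assuming GNU `stat` (Linux); the function name `verify_grid_home` is hypothetical and its defaults mirror the transcript above.

```shell
#!/usr/bin/env bash
# Hypothetical check; uses GNU stat (Linux). Defaults mirror the transcript above.
verify_grid_home() {
  local dir="$1" want_owner="${2:-grid:oinstall}" want_mode="${3:-775}"
  local owner mode
  [ -d "$dir" ] || { echo "missing: $dir"; return 1; }
  owner=$(stat -c '%U:%G' "$dir")   # owner:group
  mode=$(stat -c '%a' "$dir")       # octal permission bits
  [ "$owner" = "$want_owner" ] || { echo "owner is $owner, expected $want_owner"; return 1; }
  [ "$mode" = "$want_mode" ] || { echo "mode is $mode, expected $want_mode"; return 1; }
  echo "ok: $dir"
}
```

For example, `verify_grid_home /data01/app/11.2.0/grid_11204` can be run on node1 and again on node2.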

Verify the qosadmin user:

There is a known bug involving an AUTH failure for the qosadmin user, so check this before starting the upgrade:

GI Upgrade from 11.2.0.3.6+ to 11.2.0.4 or 12.1.0.1 Fails with User(qosadmin) is deactivated. AUTH FAILURE. (Doc ID 1577072.1)

Otherwise rootupgrade.sh will fail with the error "Failed to perform J2EE (OC4J) Container Resource upgrade at /haclu/64bit/11.2.0.4/grid/crs/install/crsconfig_lib.pm line 9323".

[root@node1 ~]# /data01/app/11.2.0/grid_11203/bin/qosctl qosadmin -listusers

AbstractLoginModule password: ---> Default password is oracle112

oc4jadmin

JtaAdmin

qosadmin
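
If the user list is saved or piped, the presence of qosadmin can be tested in a script. This sketch assumes one user per line, as in the output above, and only confirms that the user is listed; see Doc ID 1577072.1 for the actual fix. The function name `has_qosadmin` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if "qosadmin" appears as a whole line
# (optionally padded with whitespace) in the list read from stdin.
has_qosadmin() {
  grep -qx '[[:space:]]*qosadmin[[:space:]]*'
}
```

A call such as `has_qosadmin < users.txt && echo "qosadmin present"` would work on a saved copy of the list (`users.txt` is a placeholder name).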

Run the runcluvfy utility before the CRS upgrade:

./runcluvfy.sh stage -pre crsinst -upgrade -n node1,node2 -rolling -src_crshome /data01/app/11.2.0/grid_11203 -dest_crshome /data01/app/11.2.0/grid_11204 -dest_version 11.2.0.4.0 -fixup -fixupdir /home/grid/logs -verbose | tee /home/grid/logs/runcluvfy.out

Check the log file for failed checks:

[root@node1 logs]# grep -i failed runcluvfy.out
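
The same search can be turned into a pass/fail gate for a script. A minimal sketch; the function name `report_failed_checks` is hypothetical, and it treats any line containing "failed" (case-insensitive) as a failure, exactly like the grep above.

```shell
#!/usr/bin/env bash
# Hypothetical gate: list lines mentioning "failed" and return non-zero
# if there are any.
report_failed_checks() {
  local log="$1" n
  # grep -c prints 0 and exits 1 when nothing matches, hence "|| true".
  n=$(grep -ci 'failed' "$log" || true)
  if [ "$n" -gt 0 ]; then
    grep -in 'failed' "$log"          # show matching lines with line numbers
    echo "$n line(s) mention a failure"
    return 1
  fi
  echo "no failed checks found in $log"
}
```

For example, `report_failed_checks /home/grid/logs/runcluvfy.out || exit 1` stops a wrapper script before the installer is started.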

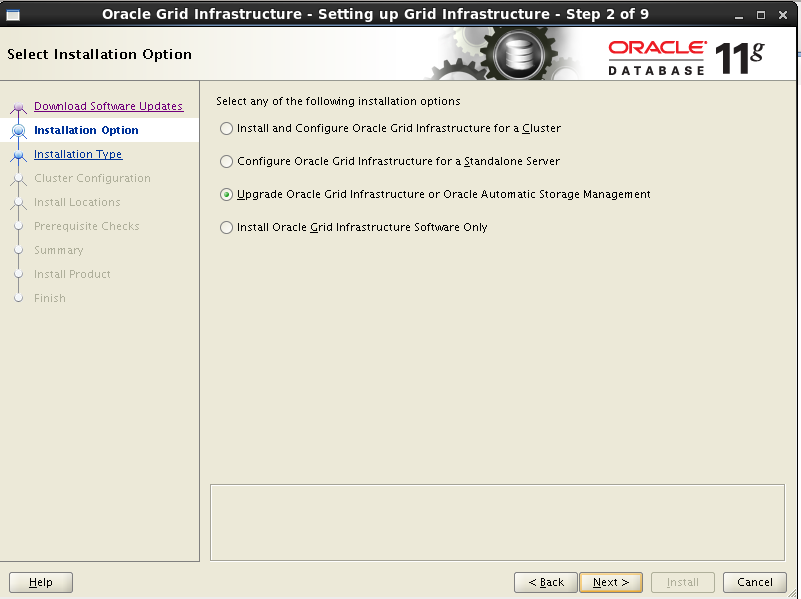

Unset the ORACLE environment variables and start runInstaller, or use the silent installation option.

A response file for a silent upgrade is provided at the end of this article. I am using the GUI runInstaller in this demo.

unset ORACLE_HOME

unset ORACLE_BASE

unset ORACLE_SID
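
The three unset commands can be grouped into one function that also reports any other ORACLE_* variables still present in the environment. This is a sketch only; the function name `clear_oracle_env` is hypothetical, and it must be called in the same shell that will start runInstaller (not in a subshell).

```shell
#!/usr/bin/env bash
# Hypothetical helper: unset the variables named above, then show any
# remaining ORACLE_* variables so they can be reviewed by hand.
clear_oracle_env() {
  unset ORACLE_HOME ORACLE_BASE ORACLE_SID
  env | grep '^ORACLE_' || echo "no ORACLE_* variables set"
}
```

Calling `clear_oracle_env` just before `./runInstaller` gives a quick visual confirmation that the old home is no longer referenced.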

Now start the runInstaller GUI as the grid user:

Solution to the above issue:

Passwordless SSH connectivity issue: 11.2.0.4 runInstaller: [INS-06006] Passwordless SSH connectivity not set up between the following nodes(s) (Doc ID 1597212.1)

Solution: check the hostnames, update the node list, and set up SSH connectivity using the sshUserSetup.sh script.

[grid@node1 ~]$ /data01/app/11.2.0/grid_11203/oui/bin/runInstaller -updateNodelist ORACLE_HOME=/data01/app/11.2.0/grid_11203 "CLUSTER_NODES={node1,node2}" CRS=true

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 1328 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /data01/app/oraInventory

'UpdateNodeList' was successful.

Node 1:

[grid@node1 ~]$

[grid@node1 sshsetup]$ sh sshUserSetup.sh -user grid -hosts node2

The output of this script is also logged into /tmp/sshUserSetup_2015-01-10-14-31-22.log

The script will setup SSH connectivity from the host node1.localdomain to all

the remote hosts. After the script is executed, the user can use SSH to run

commands on the remote hosts or copy files between this host node1.localdomain

and the remote hosts without being prompted for passwords or confirmations.

NOTE 1:

As part of the setup procedure, this script will use ssh and scp to copy

files between the local host and the remote hosts. Since the script does not

store passwords, you may be prompted for the passwords during the execution of

the script whenever ssh or scp is invoked.

NOTE 2:

AS PER SSH REQUIREMENTS, THIS SCRIPT WILL SECURE THE USER HOME DIRECTORY

AND THE .ssh DIRECTORY BY REVOKING GROUP AND WORLD WRITE PRIVILEGES TO THESE

directories.

Do you want to continue and let the script make the above mentioned changes (yes/no)?

yes

The user chose yes

THE SCRIPT WOULD ALSO BE REVOKING WRITE PERMISSIONS FOR group AND others ON THE HOME DIRECTORY FOR grid. THIS IS AN SSH REQUIREMENT.

The script would create ~grid/.ssh/config file on remote host node2. If a config file exists already at ~grid/.ssh/config, it would be backed up to ~grid/.ssh/config.backup.

The user may be prompted for a password here since the script would be running SSH on host node2.

Warning: Permanently added 'node2,192.168.56.72' (RSA) to the list of known hosts.

grid@node2's password:

Done with creating .ssh directory and setting permissions on remote host node2.

Copying local host public key to the remote host node2

The user may be prompted for a password or passphrase here since the script would be using SCP for host node2.

grid@node2's password:

Done copying local host public key to the remote host node2

SSH setup is complete.

Verifying SSH setup

===============

The script will now run the date command on the remote nodes using ssh

to verify if ssh is setup correctly. IF THE SETUP IS CORRECTLY SETUP,

THERE SHOULD BE NO OUTPUT OTHER THAN THE DATE AND SSH SHOULD NOT ASK FOR

PASSWORDS. If you see any output other than date or are prompted for the

password, ssh is not setup correctly and you will need to resolve the

issue and set up ssh again.

The possible causes for failure could be:

1. The server settings in /etc/ssh/sshd_config file do not allow ssh

for user grid.

2. The server may have disabled public key based authentication.

3. The client public key on the server may be outdated.

4. ~grid or ~grid/.ssh on the remote host may not be owned by grid.

5. User may not have passed -shared option for shared remote users or

may be passing the -shared option for non-shared remote users.

6. If there is output in addition to the date, but no password is asked,

it may be a security alert shown as part of company policy. Append the

additional text to the

Node2

Running /usr/bin/ssh -x -l grid node2 date to verify SSH connectivity has been setup from local host to node2.

IF YOU SEE ANY OTHER OUTPUT BESIDES THE OUTPUT OF THE DATE COMMAND OR IF YOU ARE PROMPTED FOR A PASSWORD HERE, IT MEANS SSH SETUP HAS NOT BEEN SUCCESSFUL. Please note that being prompted for a passphrase may be OK but being prompted for a password is ERROR.

[grid@node1 sshsetup]$ ssh node2 date

Sat Jan 10 14:32:03 IST 2015

[grid@node1 sshsetup]$ ssh node2

Last login: Fri Jan 9 17:19:25 2015 from node1.localdomain

[grid@node2 ~]$ ssh node1 date

Sat Jan 10 14:44:05 IST 2015
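
The manual `ssh <node> date` test can be repeated for every node in a loop. A minimal sketch: the function name `check_ssh` and the `SSH` override variable are hypothetical, while `BatchMode` and `ConnectTimeout` are standard OpenSSH client options.

```shell
#!/usr/bin/env bash
# Hypothetical helper. BatchMode=yes makes ssh fail instead of prompting,
# so a password prompt shows up as an error rather than hanging the script.
# SSH can be overridden (for example in a dry run); it defaults to ssh.
check_ssh() {
  local host status=0
  for host in "$@"; do
    if ${SSH:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
      echo "$host: ok"
    else
      echo "$host: FAILED"
      status=1
    fi
  done
  return "$status"
}
```

Run `check_ssh node1 node2` as the grid user on each node, since passwordless SSH has to work in both directions.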

SSH is now configured, so do not set it up again from runInstaller. Just click Next ->.

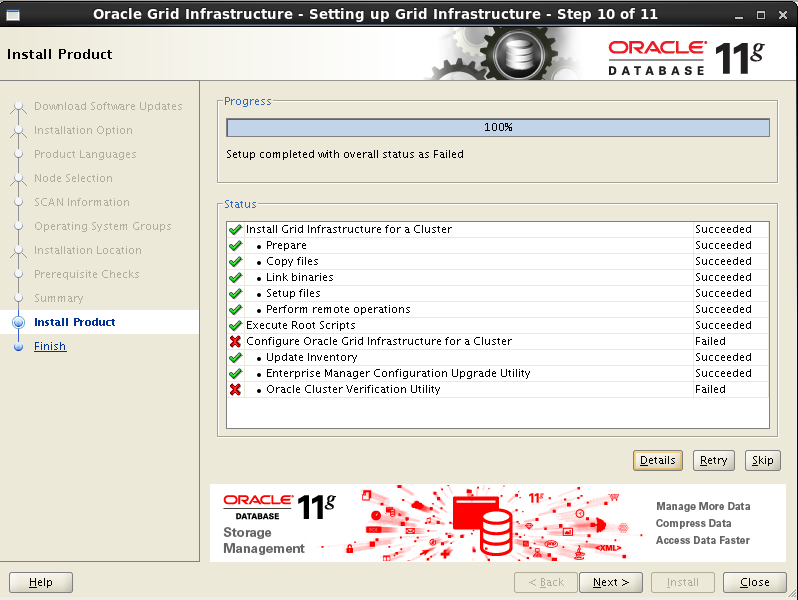

rootupgrade.sh stops the cluster on node1 and replaces the old clusterware entries with the new ones in the cluster startup (upstart) configuration. After it completes on node1, run rootupgrade.sh on node2.

[root@node1 ~]# sh /data01/app/11.2.0/grid_11204/rootupgrade.sh

[grid@node2 ~]$ crsctl stat res -t

[grid@node1 sshsetup]$ crsctl query crs softwareversion node1

Oracle Clusterware version on node [node1] is [11.2.0.4.0]

[grid@node1 sshsetup]$ crsctl query crs softwareversion node2

Oracle Clusterware version on node [node2] is [11.2.0.3.0]

[root@node2 ~]# sh /data01/app/11.2.0/grid_11204/rootupgrade.sh

[grid@node2 ~]$ crsctl stat res -t

[grid@node1 grid]$ ps -ef|grep /data01 | grep -v java | grep -v grep

[grid@node2 ~]$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [11.2.0.4.0]

[grid@node2 ~]$ crsctl query crs softwareversion

Oracle Clusterware version on node [node2] is [11.2.0.4.0]

[grid@node2 ~]$ crsctl query crs softwareversion node1

Oracle Clusterware version on node [node1] is [11.2.0.4.0]
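
The version lines printed by `crsctl query crs` can be compared against the target release in a script. A sketch under the assumption that every line ends with the version in square brackets, as in the output above; the function names `extract_version` and `all_versions_are` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking crsctl version output read from stdin.

# Print the bracketed version that follows "is" on each input line.
extract_version() {
  sed -n 's/.*is \[\([0-9.]*\)\].*/\1/p'
}

# Succeed only if at least one version was found and all equal $1.
all_versions_are() {
  extract_version | awk -v want="$1" '
    $0 != want { bad = 1 }
    END { exit (bad || NR == 0) }
  '
}
```

For example, `{ crsctl query crs softwareversion node1; crsctl query crs softwareversion node2; } | all_versions_are 11.2.0.4.0` returns success only once both nodes have been upgraded.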

[grid@node2 ~]$ crsctl query crs activeversion node1

[oracle@node1 ~]$ srvctl status database -d racdb

Instance racdb1 is running on node node1

Instance racdb2 is running on node node2

Now update the grid user's bash profile to reference the new Grid home, and enjoy :)
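
One way to make the profile change is a search-and-replace of the old home path. This is a sketch, assuming the profile mentions the old home literally (for example in an `export ORACLE_HOME=...` line); the function name `update_profile_home` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the old Grid home path with the new one.
update_profile_home() {
  local file="$1" old="$2" new="$3"
  # -i.bak edits in place and keeps the original as FILE.bak.
  # Note: old is used as a regular expression, so "." matches any character.
  sed -i.bak "s|$old|$new|g" "$file"
}
```

For example: `update_profile_home ~/.bash_profile /data01/app/11.2.0/grid_11203 /data01/app/11.2.0/grid_11204`, run as the grid user on each node, followed by a fresh login.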

Response file for silent installation:

[grid@node1 grid]$ ./runInstaller -silent -showProgress -ignoreSysPrereqs -ignorePrereq -responseFile /home/grid/grid_upgrade_to_11204.rsp

[grid@node1 ~]$ cat grid_upgrade_to_11204.rsp

See Misc A
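
Before launching a silent upgrade, it can help to confirm the key settings in the response file. A minimal sketch that reads `key=value` lines and ignores comments; the function names `rsp_get` and `check_upgrade_rsp` are hypothetical, and only the two keys relevant to an upgrade are checked.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for inspecting an OUI response file.

# Print the value of key $2 from response file $1, skipping comment lines.
rsp_get() {
  awk -F= -v key="$2" '
    !/^[ \t]*#/ && $1 == key { sub(/^[^=]*=/, ""); print; exit }
  ' "$1"
}

# Check the settings an upgrade depends on.
check_upgrade_rsp() {
  local rsp="$1"
  [ "$(rsp_get "$rsp" oracle.install.option)" = "UPGRADE" ] \
    || { echo "oracle.install.option is not UPGRADE"; return 1; }
  [ -n "$(rsp_get "$rsp" oracle.install.crs.upgrade.clusterNodes)" ] \
    || { echo "oracle.install.crs.upgrade.clusterNodes is empty"; return 1; }
  echo "ok"
}
```

For example, `check_upgrade_rsp /home/grid/grid_upgrade_to_11204.rsp` should print `ok` for the file shown below.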

Misc A

###############################################################################

## Copyright(c) Oracle Corporation 1998, 2013. All rights reserved. ##

## ##

## Specify values for the variables listed below to customize ##

## your installation. ##

## ##

## Each variable is associated with a comment. The comment ##

## can help to populate the variables with the appropriate ##

## values. ##

## ##

## IMPORTANT NOTE: This file contains plain text passwords and ##

## should be secured to have read permission only by oracle user ##

## or db administrator who owns this installation. ##

## ##

###############################################################################

###############################################################################

## ##

## Instructions to fill this response file ##

## To install and configure 'Grid Infrastructure for Cluster' ##

## - Fill out sections A,B,C,D,E,F and G ##

## - Fill out section G if OCR and voting disk should be placed on ASM ##

## ##

## To install and configure 'Grid Infrastructure for Standalone server' ##

## - Fill out sections A,B and G ##

## ##

## To install software for 'Grid Infrastructure' ##

## - Fill out sections A,B and C ##

## ##

## To upgrade clusterware and/or Automatic storage management of earlier ##

## releases ##

## - Fill out sections A,B,C,D and H ##

## ##

###############################################################################

#------------------------------------------------------------------------------

# Do not change the following system generated value.

#------------------------------------------------------------------------------

oracle.install.responseFileVersion=/oracle/install/rspfmt_crsinstall_response_schema_v11_2_0

###############################################################################

# #

# SECTION A - BASIC #

# #

###############################################################################

#-------------------------------------------------------------------------------

# Specify the hostname of the system as set during the install. It can be used

# to force the installation to use an alternative hostname rather than using the

# first hostname found on the system. (e.g., for systems with multiple hostnames

# and network interfaces)

#-------------------------------------------------------------------------------

ORACLE_HOSTNAME=node1.localdomain

#-------------------------------------------------------------------------------

# Specify the location which holds the inventory files.

# This is an optional parameter if installing on

# Windows based Operating System.

#-------------------------------------------------------------------------------

INVENTORY_LOCATION=/data01/app/oraInventory

#-------------------------------------------------------------------------------

# Specify the languages in which the components will be installed.

#

# en : English ja : Japanese

# fr : French ko : Korean

# ar : Arabic es : Latin American Spanish

# bn : Bengali lv : Latvian

# pt_BR: Brazilian Portuguese lt : Lithuanian

# bg : Bulgarian ms : Malay

# fr_CA: Canadian French es_MX: Mexican Spanish

# ca : Catalan no : Norwegian

# hr : Croatian pl : Polish

# cs : Czech pt : Portuguese

# da : Danish ro : Romanian

# nl : Dutch ru : Russian

# ar_EG: Egyptian zh_CN: Simplified Chinese

# en_GB: English (Great Britain) sk : Slovak

# et : Estonian sl : Slovenian

# fi : Finnish es_ES: Spanish

# de : German sv : Swedish

# el : Greek th : Thai

# iw : Hebrew zh_TW: Traditional Chinese

# hu : Hungarian tr : Turkish

# is : Icelandic uk : Ukrainian

# in : Indonesian vi : Vietnamese

# it : Italian

#

# all_langs : All languages

#

# Specify value as the following to select any of the languages.

# Example : SELECTED_LANGUAGES=en,fr,ja

#

# Specify value as the following to select all the languages.

# Example : SELECTED_LANGUAGES=all_langs

#-------------------------------------------------------------------------------

SELECTED_LANGUAGES=en

#-------------------------------------------------------------------------------

# Specify the installation option.

# Allowed values: CRS_CONFIG or HA_CONFIG or UPGRADE or CRS_SWONLY

# - CRS_CONFIG : To configure Grid Infrastructure for cluster

# - HA_CONFIG : To configure Grid Infrastructure for stand alone server

# - UPGRADE : To upgrade clusterware software of earlier release

# - CRS_SWONLY : To install clusterware files only (can be configured for cluster

# or stand alone server later)

#-------------------------------------------------------------------------------

oracle.install.option=UPGRADE

#-------------------------------------------------------------------------------

# Specify the complete path of the Oracle Base.

#-------------------------------------------------------------------------------

ORACLE_BASE=/data01/app/grid

#-------------------------------------------------------------------------------

# Specify the complete path of the Oracle Home.

#-------------------------------------------------------------------------------

ORACLE_HOME=/data01/app/11.2.0/grid_11204

################################################################################

# #

# SECTION B - GROUPS #

# #

# The following three groups need to be assigned for all GI installations. #

# OSDBA and OSOPER can be the same or different. OSASM must be different #

# than the other two. #

# The value to be specified for OSDBA, OSOPER and OSASM group is only for #

# Unix based Operating System. #

# #

################################################################################

#-------------------------------------------------------------------------------

# The DBA_GROUP is the OS group which is to be granted OSDBA privileges.

#-------------------------------------------------------------------------------

oracle.install.asm.OSDBA=asmdba

#-------------------------------------------------------------------------------

# The OPER_GROUP is the OS group which is to be granted OSOPER privileges.

# The value to be specified for OSOPER group is optional.

#-------------------------------------------------------------------------------

oracle.install.asm.OSOPER=asmoper

#-------------------------------------------------------------------------------

# The OSASM_GROUP is the OS group which is to be granted OSASM privileges. This

# must be different than the previous two.

#-------------------------------------------------------------------------------

oracle.install.asm.OSASM=asmdba

################################################################################

# #

# SECTION C - SCAN #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify a name for SCAN

#-------------------------------------------------------------------------------

oracle.install.crs.config.gpnp.scanName=

#-------------------------------------------------------------------------------

# Specify a unused port number for SCAN service

#-------------------------------------------------------------------------------

oracle.install.crs.config.gpnp.scanPort=

################################################################################

# #

# SECTION D - CLUSTER & GNS #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify a name for the Cluster you are creating.

#

# The maximum length allowed for clustername is 15 characters. The name can be

# any combination of lower and uppercase alphabets (A - Z), (0 - 9), hyphen(-)

# and underscore(_).

#-------------------------------------------------------------------------------

oracle.install.crs.config.clusterName=node-cluster

#-------------------------------------------------------------------------------

# Specify 'true' if you would like to configure Grid Naming Service(GNS), else

# specify 'false'

#-------------------------------------------------------------------------------

oracle.install.crs.config.gpnp.configureGNS=false

#-------------------------------------------------------------------------------

# Applicable only if you choose to configure GNS

# Specify the GNS subdomain and an unused virtual hostname for GNS service

# Additionally you may also specify if VIPs have to be autoconfigured

#

# Value for oracle.install.crs.config.autoConfigureClusterNodeVIP should be true

# if GNS is being configured(oracle.install.crs.config.gpnp.configureGNS), false

# otherwise.

#-------------------------------------------------------------------------------

oracle.install.crs.config.gpnp.gnsSubDomain=

oracle.install.crs.config.gpnp.gnsVIPAddress=

oracle.install.crs.config.autoConfigureClusterNodeVIP=false

#-------------------------------------------------------------------------------

# Specify a list of public node names, and virtual hostnames that have to be

# part of the cluster.

#

# The list should a comma-separated list of nodes. Each entry in the list

# should be a colon-separated string that contains 2 fields.

#

# The fields should be ordered as follows:

# 1. The first field is for public node name.

# 2. The second field is for virtual host name

# (specify as AUTO if you have chosen 'auto configure for VIP'

# i.e. autoConfigureClusterNodeVIP=true)

#

# Example: oracle.install.crs.config.clusterNodes=node1:node1-vip,node2:node2-vip

#-------------------------------------------------------------------------------

oracle.install.crs.config.clusterNodes=node1:,node2:

#-------------------------------------------------------------------------------

# The value should be a comma separated strings where each string is as shown below

# InterfaceName:SubnetAddress:InterfaceType

# where InterfaceType can be either "1", "2", or "3"

# (1 indicates public, 2 indicates private, and 3 indicates the interface is not used)

#

# For example: eth0:140.87.24.0:1,eth1:10.2.1.0:2,eth2:140.87.52.0:3

#

#-------------------------------------------------------------------------------

oracle.install.crs.config.networkInterfaceList=

################################################################################

# #

# SECTION E - STORAGE #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify the type of storage to use for Oracle Cluster Registry(OCR) and Voting

# Disks files

# - ASM_STORAGE

# - FILE_SYSTEM_STORAGE

#-------------------------------------------------------------------------------

oracle.install.crs.config.storageOption=

#-------------------------------------------------------------------------------

# THIS PROPERTY NEEDS TO BE FILLED ONLY IN CASE OF WINDOWS INSTALL.

# Specify a comma separated list of strings where each string is as shown below:

# Disk Number:Partition Number:Drive Letter:Format Option

# The Disk Number and Partition Number should refer to the location which has to

# be formatted. The Drive Letter should refer to the drive letter that has to be

# assigned. "Format Option" can be either of the following -

# - SOFTWARE : Format to place software binaries.

# - DATA : Format to place the OCR/VDSK files.

#

# For example: 1:2:P:DATA,1:3:Q:SOFTWARE,1:4:R:DATA,1:5:S:DATA

#

#-------------------------------------------------------------------------------

oracle.install.crs.config.sharedFileSystemStorage.diskDriveMapping=

#-------------------------------------------------------------------------------

# These properties are applicable only if FILE_SYSTEM_STORAGE is chosen for

# storing OCR and voting disk

# Specify the location(s) and redundancy for OCR and voting disks

# Multiple locations can be specified, separated by commas.

# In case of windows, mention the drive location that is specified to be

# formatted for DATA in the above property.

# Redundancy can be one of these:

# EXTERNAL - one(1) location should be specified for OCR and voting disk

# NORMAL - three(3) locations should be specified for OCR and voting disk

# Example:

# For Unix based Operating System:

# oracle.install.crs.config.sharedFileSystemStorage.votingDiskLocations=/oradbocfs/storage/vdsk1,/oradbocfs/storage/vdsk2,/oradbocfs/storage/vdsk3

# oracle.install.crs.config.sharedFileSystemStorage.ocrLocations=/oradbocfs/storage/ocr1,/oradbocfs/storage/ocr2,/oradbocfs/storage/ocr3

# For Windows based Operating System:

# oracle.install.crs.config.sharedFileSystemStorage.votingDiskLocations=P:\vdsk1,R:\vdsk2,S:\vdsk3

# oracle.install.crs.config.sharedFileSystemStorage.ocrLocations=P:\ocr1,R:\ocr2,S:\ocr3

#-------------------------------------------------------------------------------

oracle.install.crs.config.sharedFileSystemStorage.votingDiskLocations=

oracle.install.crs.config.sharedFileSystemStorage.votingDiskRedundancy=NORMAL

oracle.install.crs.config.sharedFileSystemStorage.ocrLocations=

oracle.install.crs.config.sharedFileSystemStorage.ocrRedundancy=NORMAL

################################################################################

# #

# SECTION F - IPMI #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify 'true' if you would like to configure Intelligent Power Management interface

# (IPMI), else specify 'false'

#-------------------------------------------------------------------------------

oracle.install.crs.config.useIPMI=false

#-------------------------------------------------------------------------------

# Applicable only if you choose to configure IPMI

# i.e. oracle.install.crs.config.useIPMI=true

# Specify the username and password for using IPMI service

#-------------------------------------------------------------------------------

oracle.install.crs.config.ipmi.bmcUsername=

oracle.install.crs.config.ipmi.bmcPassword=

################################################################################

# #

# SECTION G - ASM #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify a password for SYSASM user of the ASM instance

#-------------------------------------------------------------------------------

oracle.install.asm.SYSASMPassword=

#-------------------------------------------------------------------------------

# The ASM DiskGroup

#

# Example: oracle.install.asm.diskGroup.name=data

#

#-------------------------------------------------------------------------------

oracle.install.asm.diskGroup.name=

#-------------------------------------------------------------------------------

# Redundancy level to be used by ASM.

# It can be one of the following

# - NORMAL

# - HIGH

# - EXTERNAL

# Example: oracle.install.asm.diskGroup.redundancy=NORMAL

#

#-------------------------------------------------------------------------------

oracle.install.asm.diskGroup.redundancy=

#-------------------------------------------------------------------------------

# Allocation unit size to be used by ASM.

# It can be one of the following values

# - 1

# - 2

# - 4

# - 8

# - 16

# - 32

# - 64

# Example: oracle.install.asm.diskGroup.AUSize=4

# size unit is MB

#

#-------------------------------------------------------------------------------

oracle.install.asm.diskGroup.AUSize=1

#-------------------------------------------------------------------------------

# List of disks to create a ASM DiskGroup

#

# Example:

# For Unix based Operating System:

# oracle.install.asm.diskGroup.disks=/oracle/asm/disk1,/oracle/asm/disk2

# For Windows based Operating System:

# oracle.install.asm.diskGroup.disks=\\.\ORCLDISKDATA0,\\.\ORCLDISKDATA1

#

#-------------------------------------------------------------------------------

oracle.install.asm.diskGroup.disks=

#-------------------------------------------------------------------------------

# The disk discovery string to be used to discover the disks used create a ASM DiskGroup

#

# Example:

# For Unix based Operating System:

# oracle.install.asm.diskGroup.diskDiscoveryString=/oracle/asm/*

# For Windows based Operating System:

# oracle.install.asm.diskGroup.diskDiscoveryString=\\.\ORCLDISK*

#

#-------------------------------------------------------------------------------

oracle.install.asm.diskGroup.diskDiscoveryString=

#-------------------------------------------------------------------------------

# oracle.install.asm.monitorPassword=password

#-------------------------------------------------------------------------------

oracle.install.asm.monitorPassword=

################################################################################

# #

# SECTION H - UPGRADE #

# #

################################################################################

#-------------------------------------------------------------------------------

# Specify nodes for Upgrade.

# For upgrade on Windows, installer overrides the value of this parameter to include

# all the nodes of the cluster. However, the stack is upgraded one node at a time.

# Hence, this parameter may be left blank for Windows.

# Example: oracle.install.crs.upgrade.clusterNodes=node1,node2

#-------------------------------------------------------------------------------

oracle.install.crs.upgrade.clusterNodes=node1,node2

#-------------------------------------------------------------------------------

# For RAC-ASM only. oracle.install.asm.upgradeASM=true/false

# Value should be 'true' while upgrading Cluster ASM of version 11gR2(11.2.0.1.0) and above

#-------------------------------------------------------------------------------

oracle.install.asm.upgradeASM=true

#------------------------------------------------------------------------------

# Specify the auto-updates option. It can be one of the following:

# - MYORACLESUPPORT_DOWNLOAD

# - OFFLINE_UPDATES

# - SKIP_UPDATES

#------------------------------------------------------------------------------

oracle.installer.autoupdates.option=SKIP_UPDATES

#------------------------------------------------------------------------------

# In case MYORACLESUPPORT_DOWNLOAD option is chosen, specify the location where

# the updates are to be downloaded.

# In case OFFLINE_UPDATES option is chosen, specify the location where the updates

# are present.

#------------------------------------------------------------------------------

oracle.installer.autoupdates.downloadUpdatesLoc=

#------------------------------------------------------------------------------

# Specify the My Oracle Support Account Username which has the patches download privileges

# to be used for software updates.

# Example : AUTOUPDATES_MYORACLESUPPORT_USERNAME=abc@oracle.com

#------------------------------------------------------------------------------

AUTOUPDATES_MYORACLESUPPORT_USERNAME=

#------------------------------------------------------------------------------

# Specify the My Oracle Support Account Username password which has the patches download privileges

# to be used for software updates.

#

# Example : AUTOUPDATES_MYORACLESUPPORT_PASSWORD=password

#------------------------------------------------------------------------------

AUTOUPDATES_MYORACLESUPPORT_PASSWORD=

#------------------------------------------------------------------------------

# Specify the Proxy server name. Length should be greater than zero.

#

# Example : PROXY_HOST=proxy.domain.com

#------------------------------------------------------------------------------

PROXY_HOST=

#------------------------------------------------------------------------------

# Specify the proxy port number. Should be Numeric and at least 2 chars.

#

# Example : PROXY_PORT=25

#------------------------------------------------------------------------------

PROXY_PORT=0

#------------------------------------------------------------------------------

# Specify the proxy user name. Leave PROXY_USER and PROXY_PWD

# blank if your proxy server requires no authentication.

#

# Example : PROXY_USER=username

#------------------------------------------------------------------------------

PROXY_USER=

#------------------------------------------------------------------------------

# Specify the proxy password. Leave PROXY_USER and PROXY_PWD

# blank if your proxy server requires no authentication.

#

# Example : PROXY_PWD=password

#------------------------------------------------------------------------------

PROXY_PWD=

#------------------------------------------------------------------------------

# Specify the proxy realm.

#

# Example : PROXY_REALM=metalink

#------------------------------------------------------------------------------

PROXY_REALM=
